Explore how Agile Data Engine powers data teams

1. Simplified architecture, less time on maintenance.
2. Built-in team productivity, more value for the business.
3. Governed flexibility.

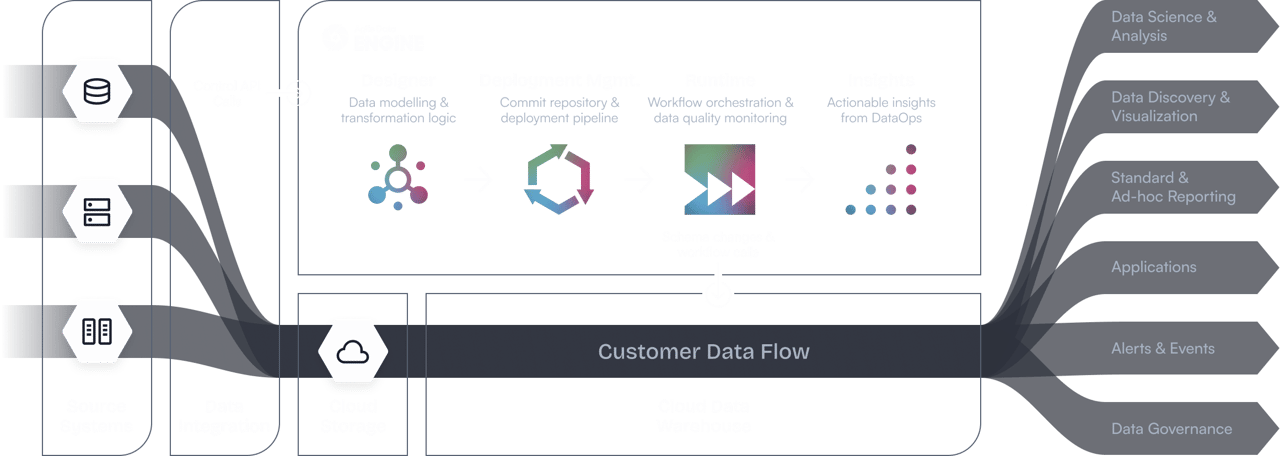

Agile Data Engine is a low-code DataOps platform for designing, deploying and operating cloud data warehouses.

Agile Data Engine transforms how enterprises build, run, and scale data warehouses and lakehouses. Turn complexity into clarity, and unscalable efforts into resilient data capability.

Agile Data Engine combines capabilities that are usually spread across data warehouse automation, ETL software, and SQL GUI tools into one platform.

| Capability | Agile Data Engine | DW Automation | ETL Software | SQL GUI Tools |
|---|---|---|---|---|
| Data extraction | | | | |
| Data loading | | | | |
| Data transformations | | | | |
| Data modeling | | | | |
| Metadata-driven change detection and automation | | | | |
| Built-in automatic schema changes | | | | |
| Built-in continuous delivery pipeline | | | | |
| Workflow scheduling and orchestration | | | | |
| Built-in near real-time workflow capabilities | | | | |
| Data testing integrated with workflows | | | | |
| Data engineering observability | | | | |
| Simultaneous multi-cloud support | | | | |
| Cloud native and fully managed (SaaS) | | | | |
| Data stays in customer cloud (data security) | | | | |